Prompt-based — The birth of a new human-machine interaction model

March 18, 2024

The way humans interact with technology has evolved significantly over the decades, and it is still evolving. The rise of Artificial Intelligence (AI) and natural language processing (NLP) has brought to light a new way of interacting: prompts.

How we got here

In the early days, interaction with computers was text-based. Users typed specific commands on a keyboard to perform tasks. Command-line interfaces (CLIs) restricted computer use to experienced users. Today this type of interaction is mostly reserved for software developers and other technical users.

DEC VT100 terminal Source: Wikipedia

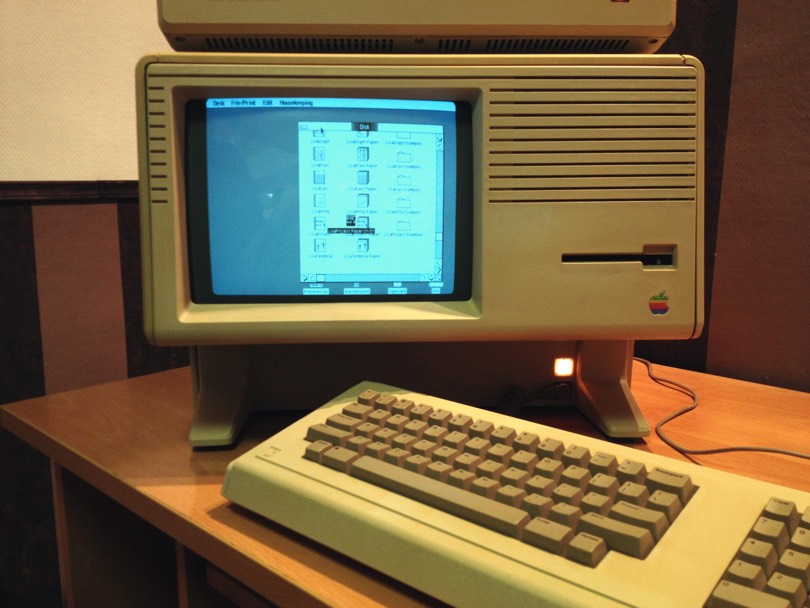

Graphical interfaces, developed during the 1970s and 1980s (at Xerox PARC, and later with Apple’s Lisa and Macintosh), were a big step towards making computers accessible to a larger number of people. Users were then able to interact with a computer system through visual indicators or representations (graphics) such as icons, windows or menus. Besides a keyboard, they started using pointing devices (such as a mouse) to operate the interface.

Apple Lisa (1983) Source: Wikipedia

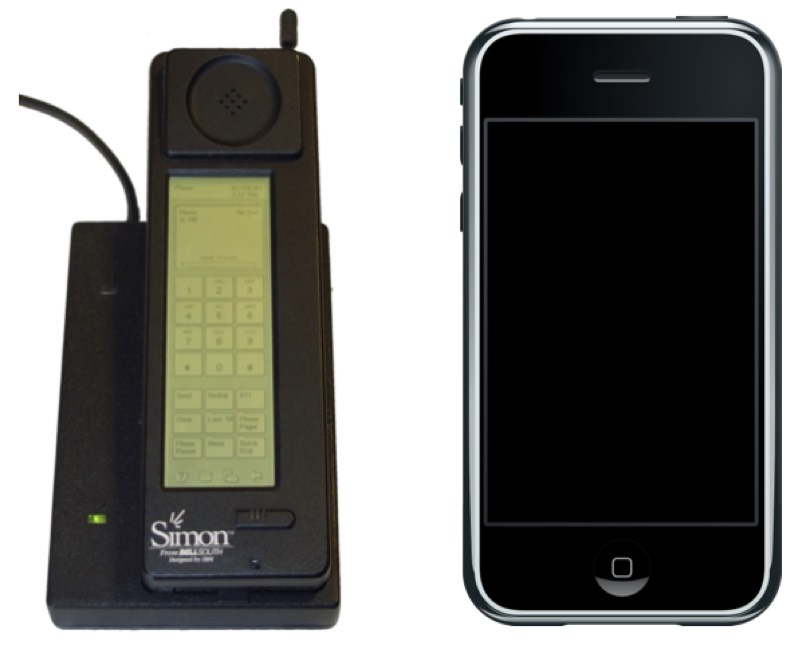

The next big revolution happened in the late 2000s with the popularisation of touchscreen technology following the release of Apple’s first iPhone in 2007. With it came a more natural way of interacting with machines: tapping, pinching and swiping are just some of the actions now possible.

IBM Simon (1994) Source: Wikipedia | 1st generation iPhone (2007) Source: Wikipedia

After touch-based interaction we moved to voice-based. Voice started to become a form of interaction in 1997 with the speech recognition software Dragon NaturallySpeaking. However, it has only become a widespread form of computing interaction fairly recently, with the debut of voice assistants like Apple’s Siri, Amazon’s Alexa and Google Assistant. We can now use our voice to create and edit documents, launch applications, open files and ask questions.

Echo Dot (2022) Source: Amazon

More recently a new form of interaction has been under development, one that is gesture-based. Technologies like Leap Motion and Microsoft Kinect eliminate the need for direct physical contact. These systems use cameras and sensors to detect hand and body movements, or even facial expressions, and interpret them as input (using machine learning algorithms). The gaming industry is leveraging this to give players a more immersive experience in virtual worlds. Although gesture-based interactions are here to stay, they haven’t become as mainstream as touch-based ones, as accuracy and responsiveness still need improvement.

Gesture-based interaction applied to the automotive industry

All these forms of interaction have one thing in common. They require us to talk to machines in their language, learning specific commands, gestures or conventions to get tasks done. We’re now witnessing a new way of interacting with machines that simply requires a conversation: prompting.

Understanding prompting

Rather than relying on complicated command lines or on finding our way through icons and menus, this new form of interaction lets us communicate with machines simply by using the language we’re familiar with.

It all started back in 2020, when OpenAI made a significant advance in AI with the launch of GPT-3, the model family behind ChatGPT, which followed in late 2022. This kind of system is capable of processing and generating language in more complex ways than previous endeavours. You can ask it to plan a vacation itinerary, write a short story or a blog post, rephrase or summarise texts, write jokes, or hold a conversation about pretty much any topic. And as if text wasn’t enough, OpenAI then turned to generating images from text inputs with DALL-E. Meanwhile, other competitors have entered the scene: Midjourney, Stable Diffusion, Imagen, etc. When we have text and image, what’s next? Video, of course. Sora promises to be OpenAI’s next breakthrough. A user’s text prompt will create realistic and imaginative videos (up to a minute long for now).

What is a prompt?

A prompt is a query or input given to an AI system to guide its response or generate a specific output.

Well-crafted prompts steer an AI model towards the specific output we want to get. The quality of the prompt largely determines the quality of the response.

Better prompts > Better outputs

If we know how to craft the right questions or instructions to guide AI models, especially Large Language Models (LLMs), we will obtain more accurate, relevant and useful outputs.

An effective prompt allows us to make full use of the capabilities of generative AI. This can be achieved by providing as much context as possible and using specific and detailed language.

We used Playground, a free-to-use AI image creator, to generate the following images. A prompt such as “generate a landscape” will produce a generic result (image on the left). However, if we refine it and include more precise instructions regarding features, shapes, textures, patterns and aesthetic styles, we’re likely to get a better result. For the second image (on the right) we used the prompt: “generate a serene landscape with mountains on the background, a calm blue lake in the foreground, a radiant sun, an italian-like villa on the lake shore”.

AI-generated images using Playground

The quality of the input influences the model’s ability to give specific and clear outputs, so a precise prompt helps reduce ambiguity and inaccurate results.
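The refinement described above, going from a generic request to a detailed one, can be sketched in a few lines of Python. This is only an illustration: the `ImagePrompt` class and its fields are hypothetical names of our own, not part of Playground or any other tool, and the output is simply a text prompt you would paste into the image generator.

```python
from dataclasses import dataclass, field


@dataclass
class ImagePrompt:
    """Hypothetical helper: collects the pieces of a detailed image prompt."""
    subject: str
    mood: str = ""
    details: list[str] = field(default_factory=list)

    def render(self) -> str:
        # Put the mood (if any) in front of the subject, e.g. "serene landscape".
        core = f"{self.mood} {self.subject}".strip()
        if not self.details:
            return f"generate a {core}"
        # Append each specific detail as a comma-separated clause.
        return f"generate a {core} with " + ", ".join(self.details)


# The generic prompt from the example above.
generic = ImagePrompt(subject="landscape")

# The refined prompt: same subject, plus mood and concrete details.
refined = ImagePrompt(
    subject="landscape",
    mood="serene",
    details=[
        "mountains on the background",
        "a calm blue lake in the foreground",
        "a radiant sun",
        "an italian-like villa on the lake shore",
    ],
)

print(generic.render())
print(refined.render())
```

Separating the subject, mood and details makes it easier to see which part of a prompt is still vague, and to adjust one element at a time while comparing results.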

A career and skill of the future?

As AI generator tools make their way into our everyday lives, we wonder what the role of prompting will be in the near future and in the longer term. Many experts claim prompting will likely become a sought-after and valuable skill in industries where getting results from AI generators can increase productivity (UX design being one of those). In fact, prompt engineering has already been emerging as a new role in IT. Prompt engineers are being hired (and making good money) to get AI systems to produce exactly what is wanted and to unlock their full potential. However, there are experts who suggest “AI systems will get more intuitive and adept at understanding natural language, reducing the need for meticulously engineered prompts”.

For someone who doesn’t have much prompting experience or deep knowledge of AI, there are already ways to improve prompts. The Prompt Perfect plugin for ChatGPT (GPT-4), for example, automatically rewrites your input to get more precise and clear results when you type “perfect” at the end of the prompt. Other AI generators have similar features.

As an example, we played around with Playground and typed the prompt:

“AI revolution. Use the painting Liberty Leading the People by Eugène Delacroix as inspiration”

We also uploaded the actual image of the painting to be used as inspiration, and then enabled the “Expand Prompt” toggle. It generated an image with the following prompt:

“AI revolution, inspired by ‘Liberty Leading the People’ by Eugène Delacroix, featuring robots and technology amidst a modern barricade, symbolic figures in the forefront representing different aspects of AI, human-like robots with expressions of determination, the iconic flag replaced with a circuitry-patterned standard, backdrop of a dystopian cityscape, chiaroscuro contrasts, smoke from burning tech debris, digital painting, ultra realistic, dramatic lighting.”

A prompt of less than two lines was expanded into a far more detailed one. Drawing on it, we could then make whatever adjustments best suited our end goal, which is far easier than typing it all from scratch. The resulting image illustrates the following section.

A revolution under way

AI-generated image (Playground)

The field of generative AI continues to progress at an impressive pace, and with it a unique set of challenges and opportunities arises.

If generating lifelike video content becomes easy for anyone who knows how to craft a good prompt, there’s a risk of inadvertently creating videos that infringe existing copyrights or raise ethical questions. The potential creation of deepfake videos might lead to misinformation and disinformation (particularly in politics), as we struggle to distinguish fake from real.

Lately we’ve been hearing reports of LLMs generating false information and conveying it as if it were true, the so-called AI hallucinations. This can have significant consequences when AI is applied in healthcare, security and data management, so we must watch out for them carefully.

We also start to question the future of websites and apps. When we ask an AI model to summarise a paper we have to study for a test, create an image to use in a design, or give us a list of the best restaurants in the neighbourhood, we’re using it as a tool to gather and present knowledge (which we may or may not consider reliable). However, LLMs may also be able to use tools themselves. So besides asking them for a travel itinerary for our next summer break, we could ask them to book flights, a hotel, a transfer and tickets for the main attractions. We could even refine our query and specify that we wish to arrive in the morning and stay at a modern-looking 4-star hotel, all within our budget. We would then be able to confirm the options we’re given or make the necessary changes. In this case we would no longer need to visit all these different websites individually: search aggregators, travel agencies, airlines, museums, etc. Everything would be handled through an effective conversation with the AI model, ultimately removing the need for apps and websites. Businesses would simply feed LLMs the information they normally present on their website or app.
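To make the idea of an LLM using tools a little more concrete, here is a minimal Python sketch of what could sit between the conversation and the services. Everything in it is an assumption made for illustration: the `ToolCall` shape, the `book_flight` and `book_hotel` functions and the Lisbon trip are hypothetical stand-ins, not a real model API or booking service. The point is only that the model would emit structured requests, and the application would run them and return options for the user to confirm.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ToolCall:
    """A structured request the model might emit instead of plain text (assumed shape)."""
    name: str
    arguments: dict


# Hypothetical stand-ins for services a business could expose to the model.
def book_flight(destination: str, arrive: str) -> str:
    return f"flight to {destination}, arriving in the {arrive}"


def book_hotel(destination: str, stars: int, style: str) -> str:
    return f"{stars}-star {style} hotel in {destination}"


# Registry of the tools the application is willing to run.
TOOLS: dict[str, Callable[..., str]] = {
    "book_flight": book_flight,
    "book_hotel": book_hotel,
}


def propose(calls: list[ToolCall]) -> list[str]:
    """Run each requested tool and collect options for the user to confirm."""
    options = []
    for call in calls:
        tool = TOOLS.get(call.name)
        if tool is None:
            # Unknown tools are reported rather than guessed at.
            options.append(f"unsupported request: {call.name}")
            continue
        options.append(tool(**call.arguments))
    return options


# Calls a model might produce from a request like "book my summer break in Lisbon".
plan = propose([
    ToolCall("book_flight", {"destination": "Lisbon", "arrive": "morning"}),
    ToolCall("book_hotel", {"destination": "Lisbon", "stars": 4, "style": "modern"}),
    ToolCall("book_transfer", {"destination": "Lisbon"}),
])
for option in plan:
    print(option)
```

Note that nothing is booked until the user has seen the proposed options, which mirrors the confirmation step described above, and that a request for a service nobody has exposed (the transfer, in this sketch) is flagged instead of silently ignored.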

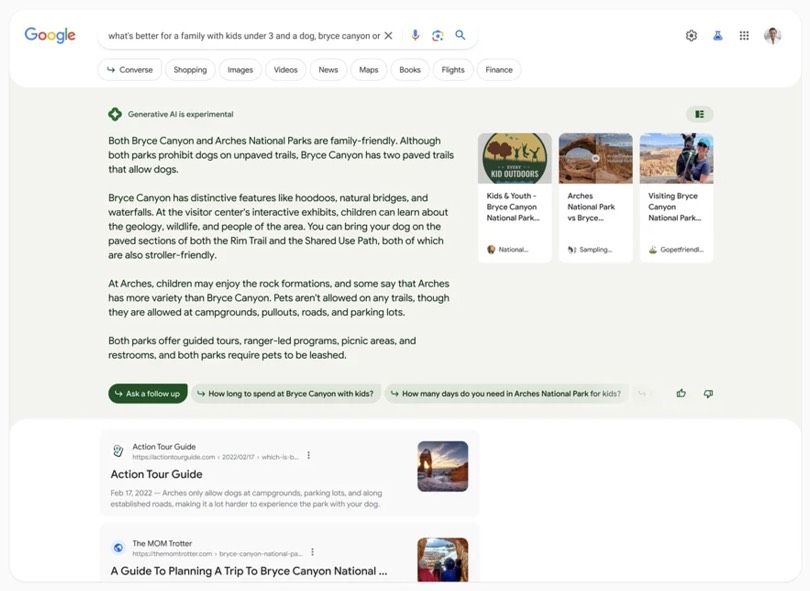

While this is still just a possibility, businesses are already starting to worry that Google’s Search Generative Experience (SGE) may keep users from visiting their websites. SGE could significantly change the way search results are consumed. Instead of the typical list of blue hyperlinks we get as a search result, we’ll be presented with a few AI-generated paragraphs containing key information, as well as a few links leading to additional information.

Example of how search results are presented with SGE. Presented in May 2023, it’s still an experiment, and only available in the US. Source: Google

While we can’t help but wonder about the challenges rapid AI developments will present, we also can’t overlook the opportunities they can bring.

There’s certainly potential for making technology more accessible, intuitive and human-centric. AI is set to reshape a number of industries: education, healthcare, marketing, automotive, sales, manufacturing, etc. While businesses can leverage AI to develop a new generation of products and services, boost their sales and improve customer service, people can benefit from easier access to education and training, improved health care, safer transportation, and tailored products and services.

What does it mean for UX designers?

For better or worse, the way humans, machines and data interact is going through a major change. AI will certainly change the way we design and develop web content in the coming years. UX designers are in a unique position to help design AI in such a way that it collaborates with humans rather than replaces them. While AI can become a powerful tool to streamline design work, helping UX professionals create more personalised and effective user experiences, AI interfaces will also need UX designers in order to become more user-friendly.
